
5 Amazing Google Ads Tools You Need to Use

Google Ads can be overwhelming in its scope.

It’s a versatile and competitive medium, and the wealth of tools can create two situations:

- It feels like too much to learn, so new things are avoided.

- The tools aren’t easy to spot.

As the platform continues to evolve, so do the tools Google provides!

Some save time, some give you insights faster, and others can help expand your Google Ads efforts in ways that are automated or backed by data.

Here are five tools in the Google Ads platform worth trying if you haven't already.

Some are newer and some are oldies but goodies, but all of them deserve a shot.

1. Ad Variations

As most PPCers will tell you, ad testing is a core best practice for getting the strongest performance possible.

Ad testing was a more frustrating experience once upon a time. While you could create multiple versions of a text ad, many buyers felt like Google would pick a winner too fast.

It was also labor-intensive to create several versions, pull reporting at scale, and get insights quickly.

The ad creative types in search have evolved since that time, and so have the tools for making them.

The original text ad format changed to expanded text ads, providing more space to test copy.

Google also launched RSAs (responsive search ads) to quickly test combinations of copy automatically, and new tools devoted to ad testing started to appear.

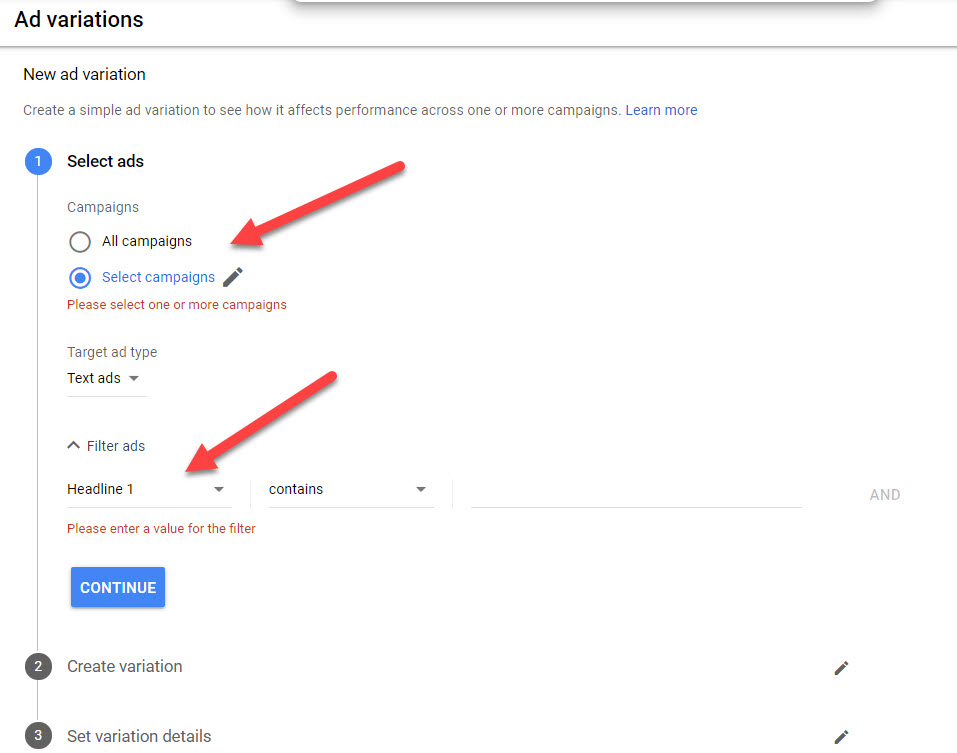

One of these tools is the Ad Variations tool. Located in the Drafts and Experiments area, this feature allows brands to create variations on their ads faster.

Brands can choose to run ad variations for the whole account, specific campaigns, or a custom scope. Next, they specify which part of the ad the variation applies to.

They then choose the type of variation to run, such as finding and replacing text, swapping headline order, or updating text altogether.

Once the experiment launches, results are shown and monitored in the Ad Variations area.

2. Audience Observation

For a long time, Google usually lost out to Facebook when advertisers wanted to reach users based on their demographics.

Need to reach female runners in their 40s? While they are certainly searching on Google, there wasn’t an easy way to segment them or see if their searches were more valuable.

This made it difficult for advertisers to understand whether certain audience types performed better, even when searching the same terms as another demographic.

It also limited what advertisers could learn about other possible audiences they could be targeting.

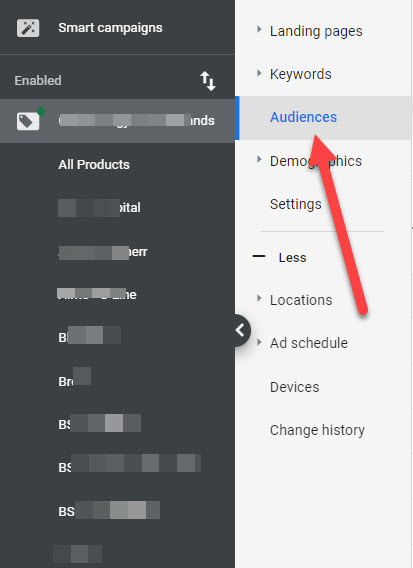

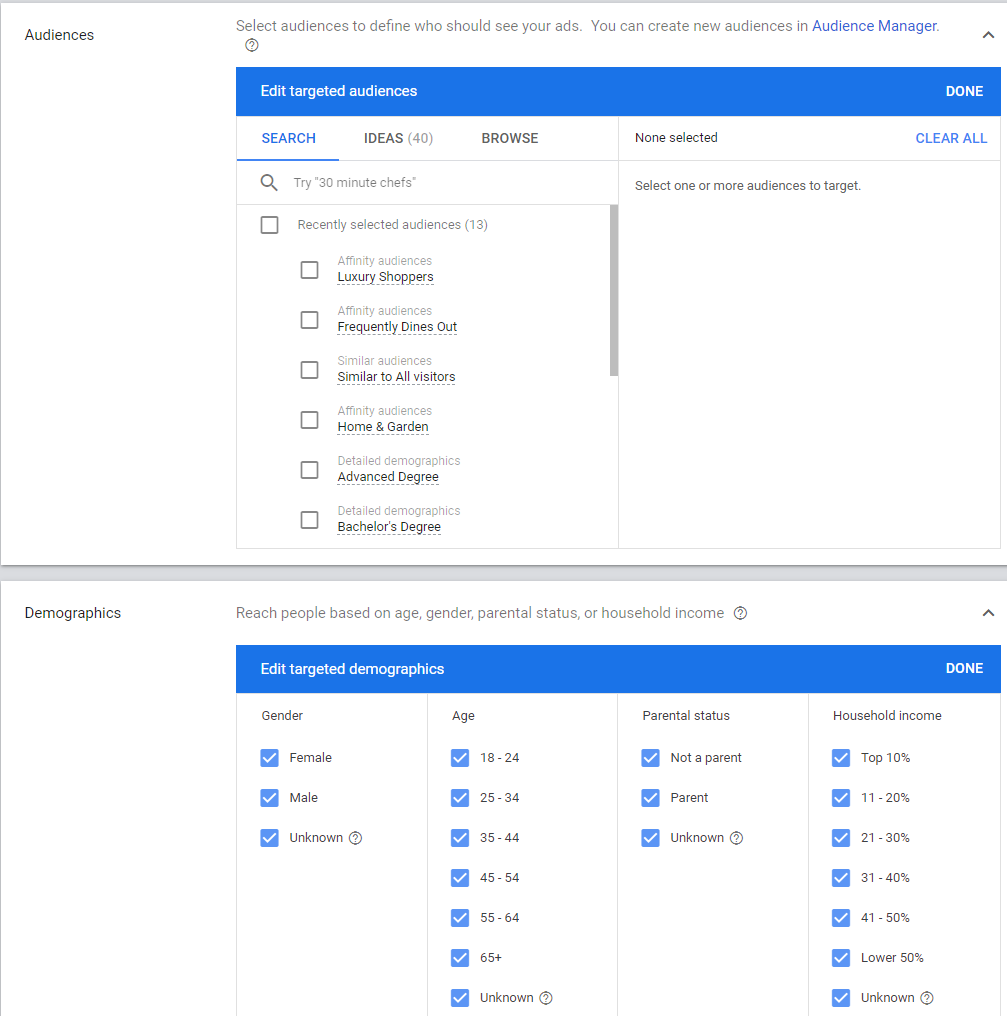

The option to observe Audiences helps close these gaps!

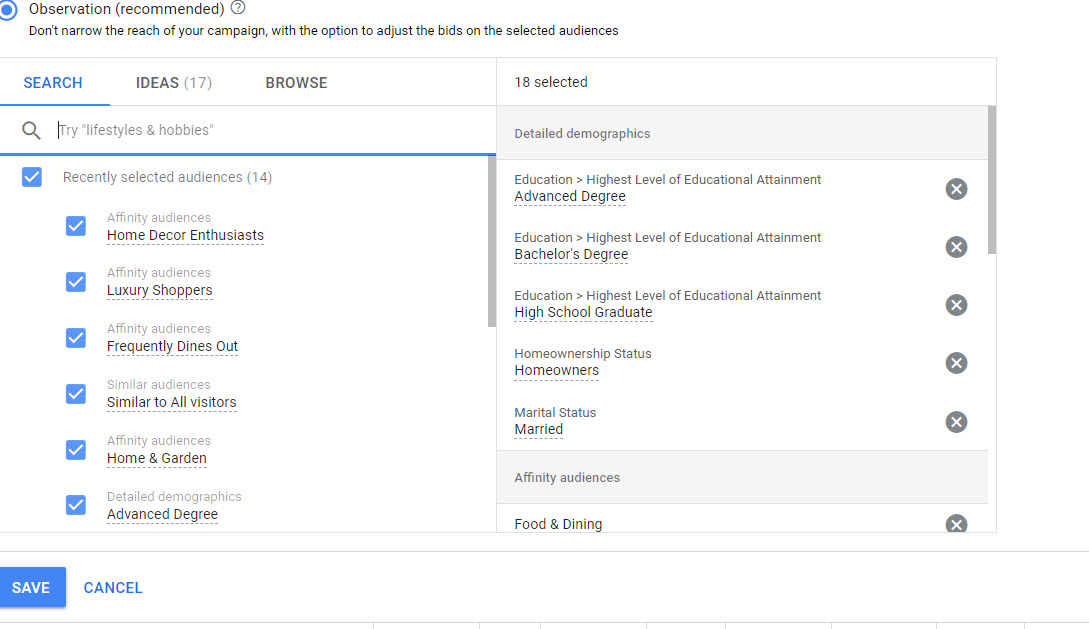

Advertisers can add a host of Google-defined audiences to their campaigns and observe how each performs relative to the others.

Based on that data, bids can be adjusted specifically for each demographic.


Google will automatically suggest Audiences in the “Ideas” section.

This is a good way to jumpstart the process, and advertisers can also search by affinity audiences, in-market audiences, or other demographic markers.

3. Responsive Search Ads

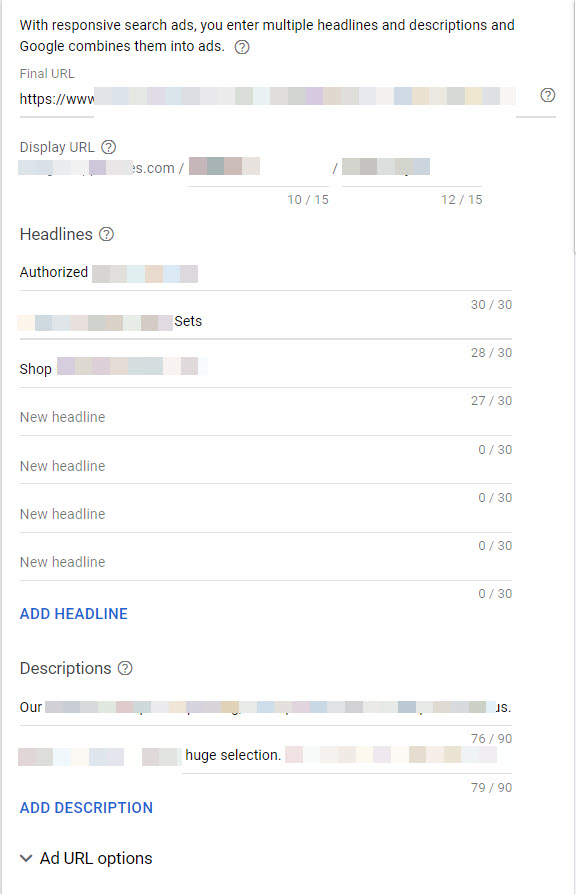

Responsive Search Ads (commonly called RSAs) are another time-saver in ad testing.

Unlike traditional text ads where the advertiser creates separate, distinct versions of ads, RSAs employ a mix-and-match philosophy.

The ad copy is treated as several separate assets that Google then merges together to create a cohesive ad.

Once RSAs are live, you can see results by clicking the "View asset details" link just below the ad copy.

Users can view the individual asset stats and the results by combination of text.

This quick and easy testing setup gives insights about verbiage users respond to best, and lets advertisers test combinations faster than manually creating every version they would need.

One thing to watch closely is the copy the advertiser supplies.

Every line must work in any combination without reading awkwardly when paired with the others.

Make sure to think through and vet your copy options with that in mind before you start running them.

4. Discovery Campaigns

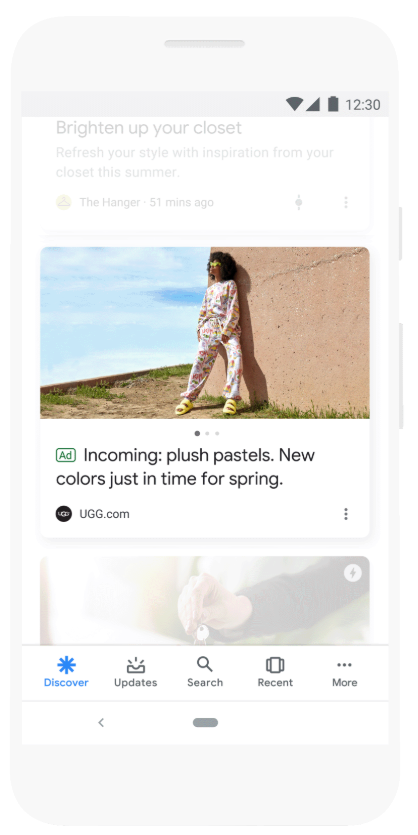

Unlike Search ads, Discovery campaigns are visually rich ad units shown based on user activity.

They depart from Search both in their format and in how they're targeted.

They are display-like in how they render to the user, combining imagery and a headline.

Also like display, they are primarily a top-of-funnel tactic for catching a user's eye and generating interest in a product.

Discovery ads appear across the Google Discover Feed (shown on the home page of the Google app or the Google.com homepage on mobile), YouTube home feed, and also Gmail.

Targeting uses the same familiar interface and options for specifying Audiences as other campaign types.

Discovery Campaigns are largely automated by Google.

Advertisers can't make the changes they're used to in Search, such as device bid adjustments, ad rotation, or frequency capping.

There are still exclusion options to help with brand safety, such as keeping ads away from content involving violence or profanity.

5. Explanations Feature

It’s the bane of a PPC manager’s existence: sudden, unexpected changes in performance.

Diagnosing these instances can lead to a lot of digging, pivot table-ing, and sweating!

The Explanations feature is here to help.

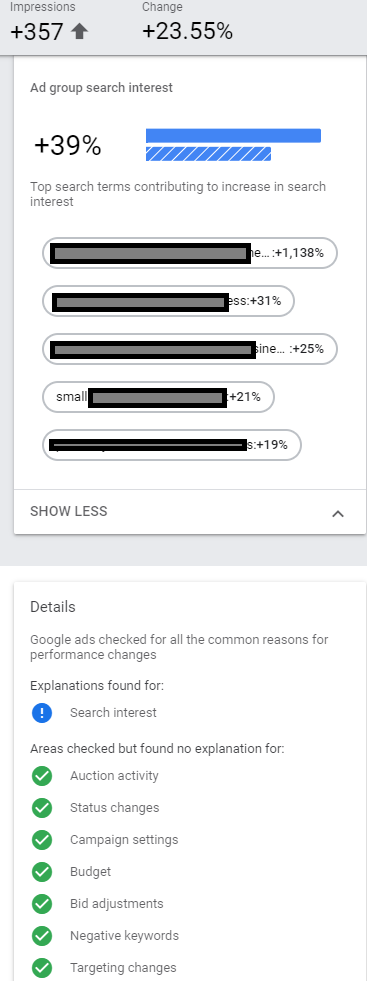

Currently in beta, it provides insights into Search campaign performance and why it may have changed.

Data can be analyzed for impressions, clicks, costs, and conversions for up to a 90-day window of time.

The Explanations feature works by comparing one date range’s results to another.

Doing this typically shows you a percentage change between the two date ranges.

If there’s an Explanation available, that difference turns blue like a hyperlink.

Clicking the link brings up a small window with the option to open a larger Explanation.

It notes the primary driver of the change, along with contributing factors.

In this example, there was an increase in impression volume.

The details reveal there was an increase in search volume, and Google outlines the specific terms that saw the increase.

This is a far cry from looking in search history, digging through search terms manually, sorting, and crunching data.

Source: https://www.searchenginejournal.com/google-ads-tools/374962/#close