I asked Gemini and GPT-4 to explain deep learning AI, and Gemini won hands down

I recently tested Google’s Gemini chatbot, formerly Bard, against OpenAI’s ChatGPT-4. The test centered on a key element of modern deep-learning artificial intelligence (AI), the kind of AI that underlies both ChatGPT and Gemini. That element is called ‘stochastic gradient descent’ (SGD).

Writers, especially journalists, tend to lean on metaphor and analogy to explain complex things. Abstract concepts, such as SGD, are hard for a layperson to grasp. So, I figured explaining a very tough scientific concept in metaphor was a good test of both programs.

I asked Gemini and ChatGPT a simple question that you might ask when trying to understand an area through an analogy:

Is there a good analogy or metaphor for stochastic gradient descent?

As far as fulfilling the goal of explaining SGD via analogy, Google won hands down. Google was able to handle repeated prompts that sought to refine the solution. OpenAI’s ChatGPT, by contrast, got stuck, simply repeating bits of information in different ways without advancing the solution.

But first, some context. Stochastic gradient descent is a mathematical method used in the training of neural networks. It’s sometimes referred to as a “learning rule”, the core mechanism by which the training procedure refines the program.

A neural network produces output, and training involves comparing that output to a known good output, then altering the neural network until its output gets as close as possible to the known good one. To close the divide between the actual output and the golden example, a series of changes is made to the settings of the neural network.
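For readers who want to see that loop in code, here is a minimal sketch in Python. It is an illustration only, not how Gemini or ChatGPT are actually trained: the "network" is a single line, y = w*x + b, and the function name `sgd_fit_line`, the learning rate, the epoch count, and the training data are all assumptions made up for this example.

```python
import random


def sgd_fit_line(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error.

    Hypothetical helper for illustration; lr, epochs, and seed are
    assumed values, not taken from any real training setup.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # the "settings" of this tiny one-neuron network
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # "stochastic": visit examples in random order
        for x, y_true in samples:
            y_pred = w * x + b       # actual output
            error = y_pred - y_true  # gap to the known good output
            # Gradient of 0.5 * error**2 with respect to w and b;
            # step a little way downhill along each one.
            w -= lr * error * x
            b -= lr * error
    return w, b


# Made-up "golden examples" generated from the line y = 2x + 1.
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = sgd_fit_line(data)
print(w, b)
```

Each pass nudges `w` and `b` a small step in the direction that shrinks the error on one example at a time, so on this noise-free data the two settings should drift toward 2 and 1. A real neural network does the same thing with millions or billions of settings instead of two.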

The classic analogy for this situation is an ant trying to find its way down a hill to the lowest point by randomly crossing the terrain, when the ant can’t see the entire terrain.

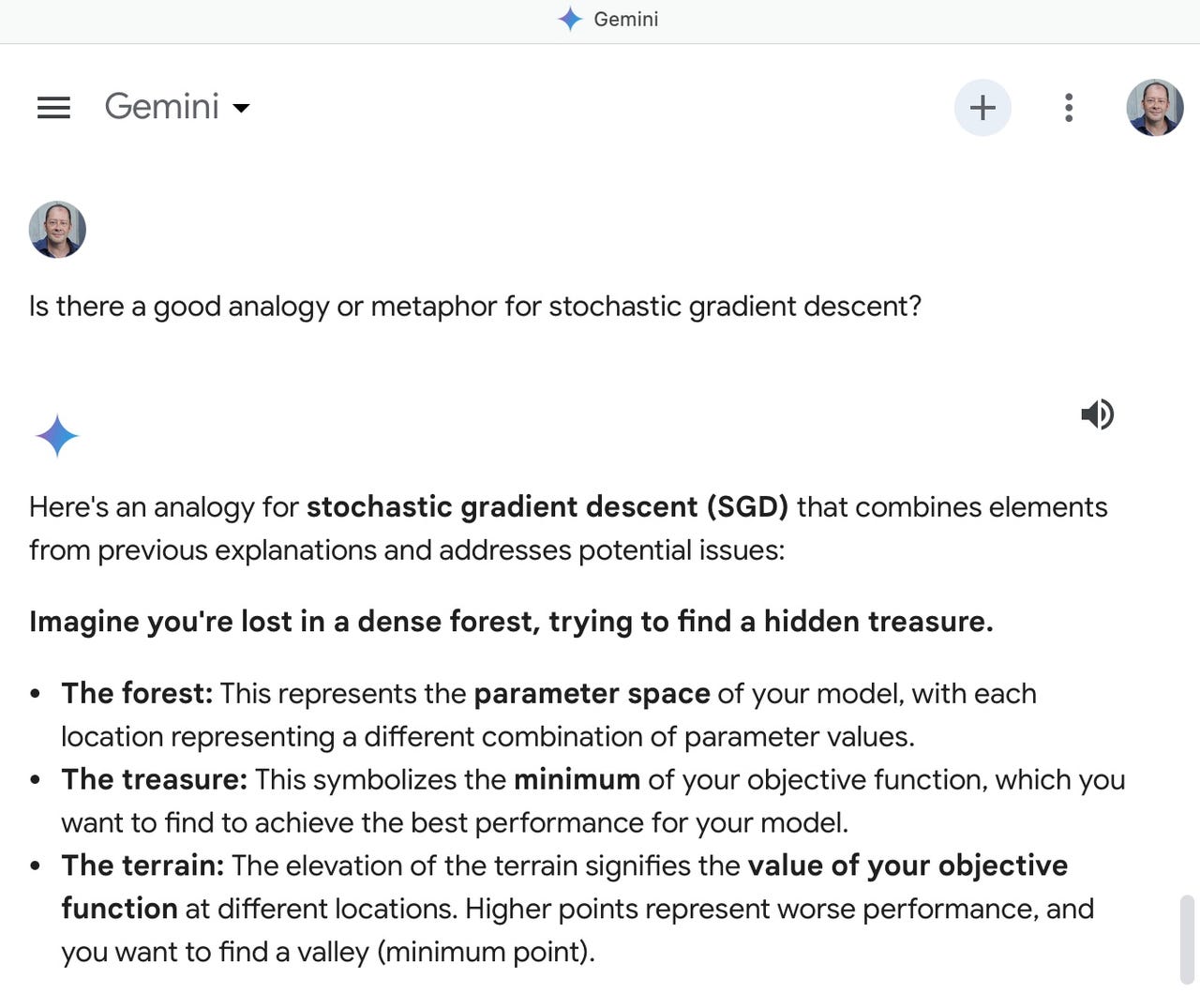

Both Gemini and ChatGPT-4 gave decent, similar output in response to my original question. Gemini used the analogy of finding a treasure at the bottom of a valley, with the treasure being a metaphor for narrowing the difference between desired output and actual output:

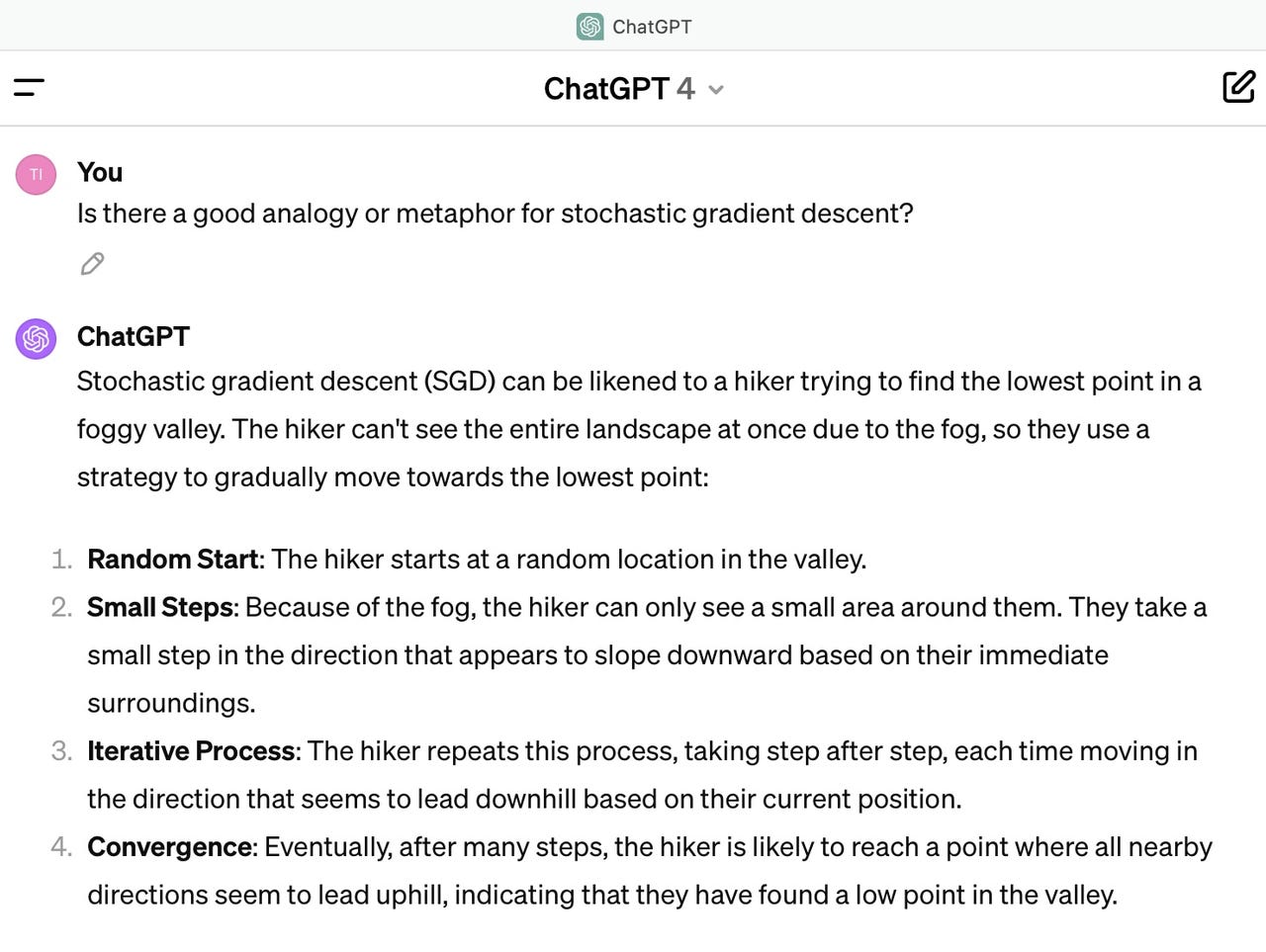

OpenAI, on the other hand, used the analogy of a hiker wandering in a dense fog down a mountain, who is not able to see very far when trying to get to the bottom of a valley:

Both results were fair enough. But I then input more challenging prompts. And that’s where the results diverged.

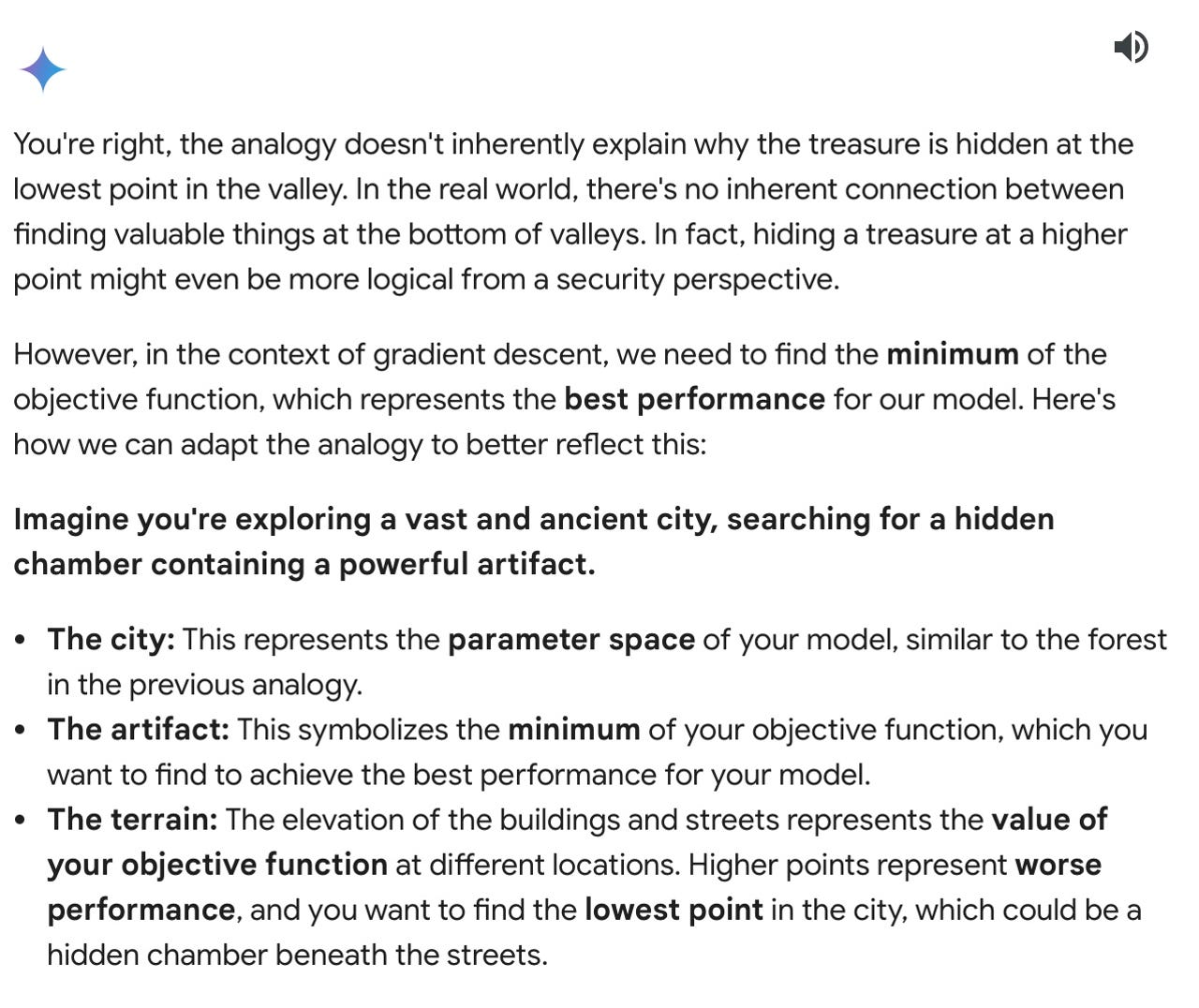

I challenged Gemini to explain why the treasure in the analogy was down at the bottom of a valley. Why not place the treasure up on a peak? “You’re right,” Gemini offered in response, “hiding a treasure at a higher point might even be more logical from a security perspective.”

Gemini then offered to adjust the analogy with a story of treasure hidden in a secret chamber in archaeological ruins, so that “intuitively” it made more sense, according to Gemini. I thought that approach was a nice way to incorporate the “Why?” from the prompt to move the analogy forward.

The output from ChatGPT-4 to this question came as a surprise. Rather than seek to improve on the analogy, in the way that Gemini did, ChatGPT-4 merely produced an explanation of why the analogy was formulated in this way. The result was an accurate, and verbose, explanation, but it didn’t advance the task of re-formulating the output to produce a better solution:

Of course, there are lots of ways that all the prompts could be amended to condition both programs to yield different kinds of responses.

From a cold start, however, Gemini’s output showed a capability to follow a task through to the next solution when prompted. OpenAI’s program lost the plot, merely reiterating information about the answer it had already generated.

And as SGD is a key element of deep learning AI, it’s fair to conclude that Gemini wins this challenge.

Source: https://www.zdnet.com/article/i-asked-gemini-and-gpt-4-to-explain-deep-learning-ai-and-gemini-won-hands-down/